Executive Report: The Case for an AI Compute Grid

7/19/2025

Prepared by: AI Grid Alliance (AIGA)

Date: July 2025

1. Executive Summary

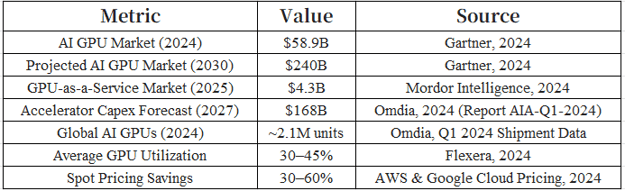

AI workloads are driving unprecedented demand for GPU compute, yet enterprise GPU utilization remains low, averaging 30–45% (Flexera, 2024). This underutilization leaves a large share of GPU capital idle each year. The AI Grid Alliance (AIGA) proposes a federated, open-standard AI compute grid that pools unused GPU capacity across organizations to improve resource efficiency.

Key Benefits:

Improve access to AI compute resources for smaller organizations

Reduce compute costs by an estimated 30–45% through improved utilization

Lower energy consumption by 18–35% for batch processing workloads (Appelhans et al., 2023)

Create potential economic value of roughly $1 billion annually by 2030

2. Market Landscape and Economic Opportunity

3. Technical Feasibility

Core Technologies:

Orchestration: Kueue, a Kubernetes-native job queueing system, supports batch job queueing across clusters and helps distribute workloads efficiently (CNCF, 2023).

Security: Confidential VMs, such as those built on AMD SEV or on NVIDIA H100 GPUs with confidential computing enabled, help protect the confidentiality and integrity of tenant data in multi-tenant environments (NVIDIA, 2023).

Efficiency: Batch inference can reduce energy use by 18–35% in clusters with significant batch workloads (Appelhans et al., 2023).

Limitations:

Homomorphic encryption is computationally intensive, limiting its practicality for real-time AI workloads.

Real-time inference workloads may face latency challenges in a distributed grid environment.

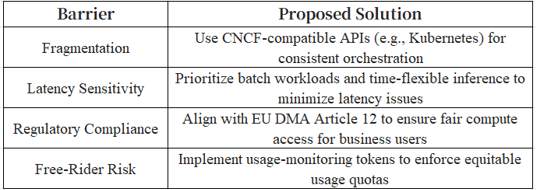

4. Barriers and Solutions

5. Economic Model

Assumptions:

Approximately 2.1M global AI GPUs (Omdia, 2024)

40% average idle rate (a conservative assumption; the 30–45% utilization reported by Flexera, 2024, would imply 55–70% idle)

$0.85 per GPU-hour average spot instance value (based on AWS/GCP spot rates, 2024)

Annual Potential Value:

Total idle GPUs: 2.1M × 40% = 840,000

Usable idle GPUs (assuming 20% availability): 840,000 × 20% = 168,000

Value calculation: 168,000 GPUs × 8,760 hrs/year × $0.85/hr ≈ $1.25B

Net value (after 20% overhead for orchestration and network costs): ≈ $1.0B/year

Note: The model assumes uniform GPU availability and consistent spot pricing, which may vary by region and provider. Overhead estimates are approximate and subject to implementation-specific factors.
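
The arithmetic above can be reproduced with a short script. This is a minimal sketch under the report's stated assumptions; the class and function names (`GridAssumptions`, `annual_value`) are illustrative, and the alternative spot prices in the sensitivity loop are hypothetical values chosen only to show how the result moves, not figures from any source.

```python
from dataclasses import dataclass, replace

HOURS_PER_YEAR = 8_760  # 365 days x 24 hours


@dataclass(frozen=True)
class GridAssumptions:
    """Inputs taken from the report's Economic Model section."""
    total_gpus: float = 2_100_000    # global AI GPUs (Omdia, 2024)
    idle_rate: float = 0.40          # assumed average idle rate
    availability: float = 0.20       # share of idle GPUs usable by the grid
    spot_price_per_hr: float = 0.85  # USD per GPU-hour (AWS/GCP spot, 2024)
    overhead: float = 0.20           # orchestration and network costs


def annual_value(a: GridAssumptions) -> dict:
    """Return idle GPUs, usable GPUs, and gross/net annual value in USD."""
    idle = a.total_gpus * a.idle_rate
    usable = idle * a.availability
    gross = usable * HOURS_PER_YEAR * a.spot_price_per_hr
    net = gross * (1 - a.overhead)
    return {"idle_gpus": idle, "usable_gpus": usable,
            "gross_usd": gross, "net_usd": net}


base = annual_value(GridAssumptions())
print(f"Gross: ${base['gross_usd'] / 1e9:.2f}B, "
      f"net: ${base['net_usd'] / 1e9:.2f}B")
# Gross: $1.25B, net: $1.00B

# Hypothetical sensitivity check: vary only the spot price.
for price in (0.60, 0.85, 1.10):
    scenario = annual_value(replace(GridAssumptions(), spot_price_per_hr=price))
    print(f"${price:.2f}/hr -> net ${scenario['net_usd'] / 1e9:.2f}B")
```

Because every input is a multiplicative factor, the net value scales linearly with each one: halving the availability share or the spot price halves the result.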

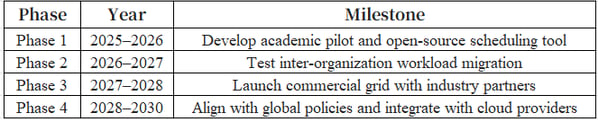

6. Roadmap

7. Call to Action

Who We Need:

Cloud Providers: Contribute excess compute capacity to the grid.

Researchers: Provide AI workloads for testing and validation.

Policy Leaders: Advocate for legislation ensuring fair compute access.

Investors: Fund infrastructure development and open-source tooling.

8. References

Amazon Web Services. (2024). EC2 Spot Instances pricing.

Appelhans, M., et al. (2023). Batch processing efficiency in federated GPU clusters. Energy Efficiency Journal, 16(4).

Cloud Native Computing Foundation. (2023). Kueue: Batch job queueing for HPC.

European Commission. (2022). Regulation (EU) 2022/1925 on contestable and fair markets in the digital sector (Digital Markets Act). Official Journal of the European Union, L265/1.

Flexera. (2024). 2024 State of the Cloud Report.

Gartner. (2024). Forecast: AI Semiconductors, Worldwide, 2022-2028 (ID G00798234).

Google Cloud. (2024). Spot VM pricing for Compute Engine.

Mordor Intelligence. (2024). GPU as a Service Market - Forecast 2025–2030 (Report 978-1-005-98421-7).

NVIDIA. (2023). Confidential computing for NVIDIA H100 Tensor Core GPUs [Technical brief].

Omdia. (2024). AI Accelerator Market Tracker – Q1 2024 (Report AIA-Q1-2024).


© 2025 AI Grid Alliance. All rights reserved.
